
Currently, I am a Principal Research Scientist at Adobe Research. My research focuses on combining computer graphics, vision, and machine learning to make it faster and more fun to complete creative tasks. I received my PhD from Stanford University, supported by a Hertz Fellowship, where I worked with Pat Hanrahan. I completed my undergraduate degree at Caltech working with Mathieu Desbrun. During a postdoc at Stanford, I also worked on the data analytics team at Khan Academy.

Technology Transfers

I've helped develop many different technologies that have shipped across a wide range of Adobe products.

#ProjectTurntable | Adobe MAX Sneaks 2024

I Tried Adobe Illustrator’s New Turntable Feature… and WOW!

Rotating a 2D illustration to a different angle typically requires redrawing the entire image from scratch. Turntable allows you to take a flat vector object and synthesize what it would look like from different viewing angles, letting artists smoothly slide through a wide range of rotations. The system intelligently infers hidden geometry: if your character only shows two legs, Turntable figures out where the other two should be. Artists can extract any generated view for further editing or use the outputs as high-quality references to manually trace from. This is particularly powerful for character turnarounds, product visualization, and logo exploration where seeing multiple perspectives accelerates ideation.

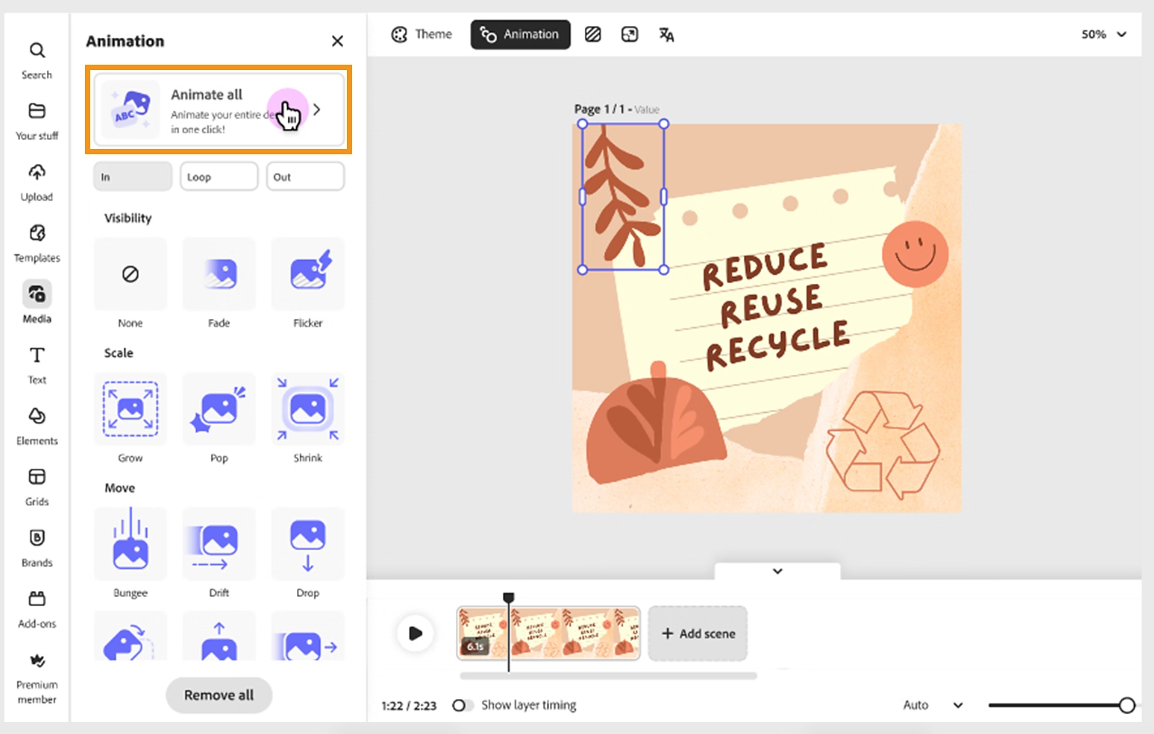

The AI—and the human touch—behind the new Animate All Feature in Adobe Express

Animate individual design elements or apply one-tap Animate all to your entire design with AI to ensure the most important elements stand out. Learn to customize animation types, adjust settings like direction and speed, and download your animated creations easily.

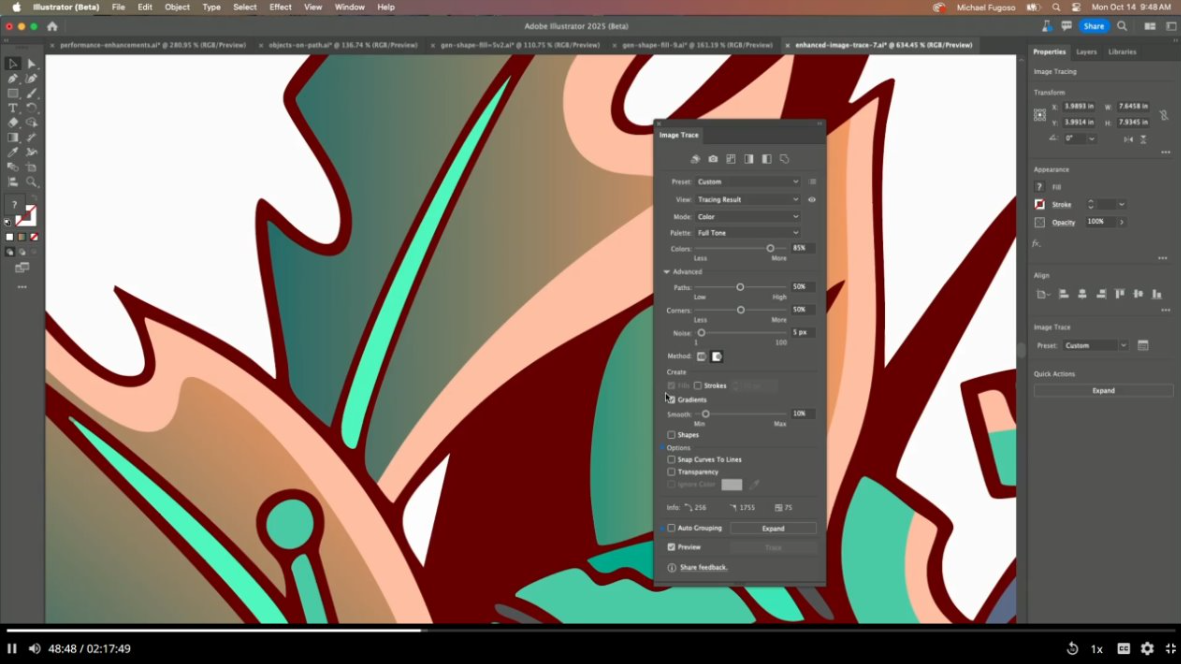

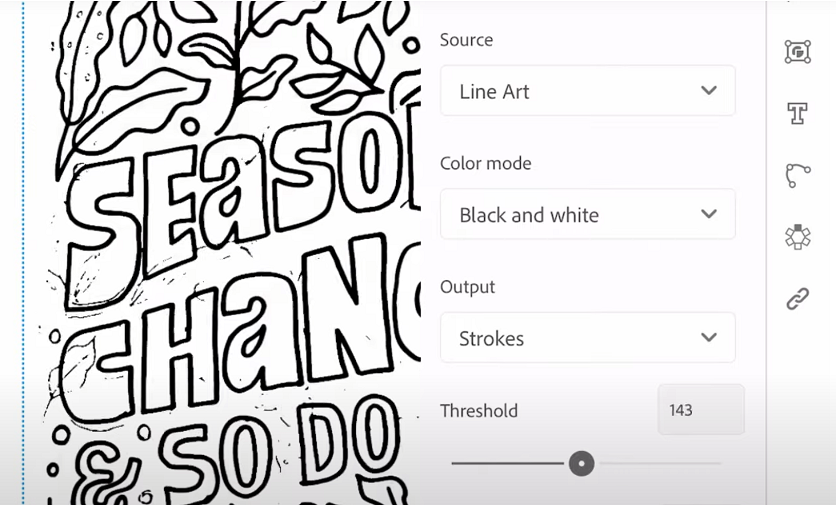

The enhanced Image Trace feature lets you create accurate traces with improved curves that closely match the original image.

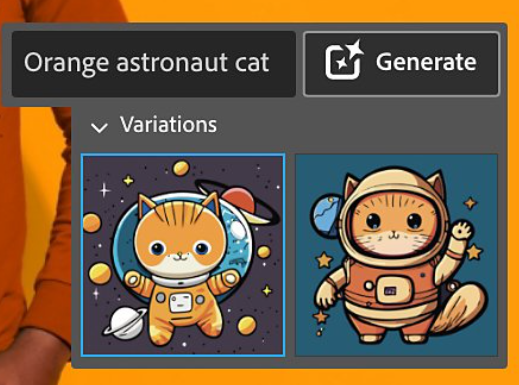

Mind Blowing Text to Vector in Adobe Illustrator!

I worked on a new generative AI technology that creates vector graphics artwork from a text description. This work is part of a broad effort to model very difficult distributions, such as the space of beautiful images, and recent successes here have created powerful technology that Adobe is working to apply across many different domains. The tool creates segmented vector artwork from your text prompt. A lot of geometric work goes into ensuring that the result is not just a flat set of tens of thousands of paths, but a segmented, editable geometric representation that artists can manipulate more easily.

A.I. is Finally in Adobe Illustrator! - Generative Recolor

This generative model lets you recolor your artwork using only a text prompt as guidance. It builds on image-based recoloring technology we designed earlier, and makes it very quick and easy to try out new coloring ideas.

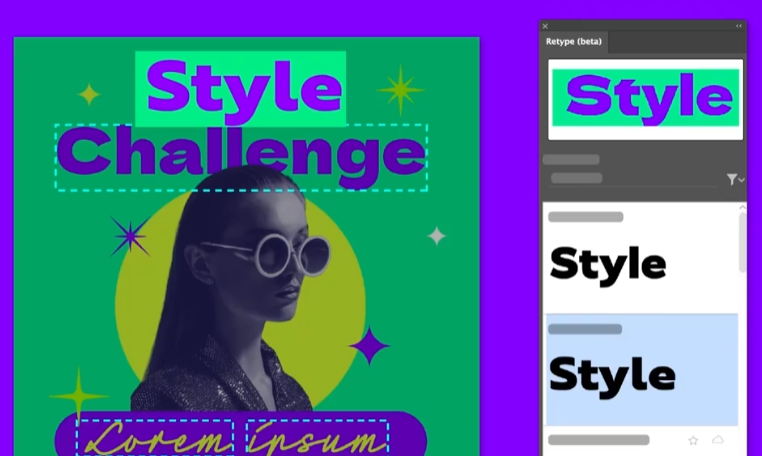

Identify Fonts using Retype (Beta) in Illustrator

Editing text within Illustrator is easy, but you frequently encounter either a rasterized image or an "outlined" font that is just a collection of Beziers and has lost its original connection to the underlying font. The Retype feature can detect the underlying font and let you easily edit the text. It even recreates text-on-path effects, for text that is not just a single line of characters.

Adobe Unveils New Generative AI Features for Photos, Videos, Audio, and 3D

This is an ongoing research project into directly generating vector graphics from image or text-based conditioning. Font glyphs are an excellent example of a regular structure whose patterns we can learn to help create new glyphs that match the input image style.

Adobe’s mind-blowing AI turns napkin sketches into cartoons

Tracing, coloring, and shading vector artwork can be a very exacting and time-consuming process. Project Sunshine jump starts this process by helping artists to add vibrant colors and shading to their artwork. Artists can select a character or object and Project Sunshine will use machine learning to understand what the artwork represents and suggest many different ways to color it. Project Sunshine can also help bring shadows and highlights to artwork while letting the artist experiment with lighting from multiple directions.

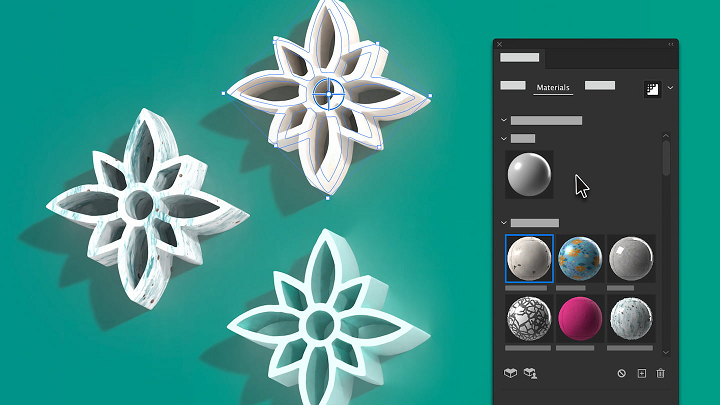

We added powerful 3D raytracing features to Illustrator. I helped write the Monte Carlo denoiser, based on our SIGGRAPH 2021 paper Interactive Monte Carlo Denoising using Affinity of Neural Features.

This technology takes your sketches, photographs, or other artwork and converts them into vector graphics. I trained a series of deep learning models that help the vectorization engine understand which parts of a sketch to focus on, which lets it ignore aspects like shadows, smudges, grid lines, and other artifacts.

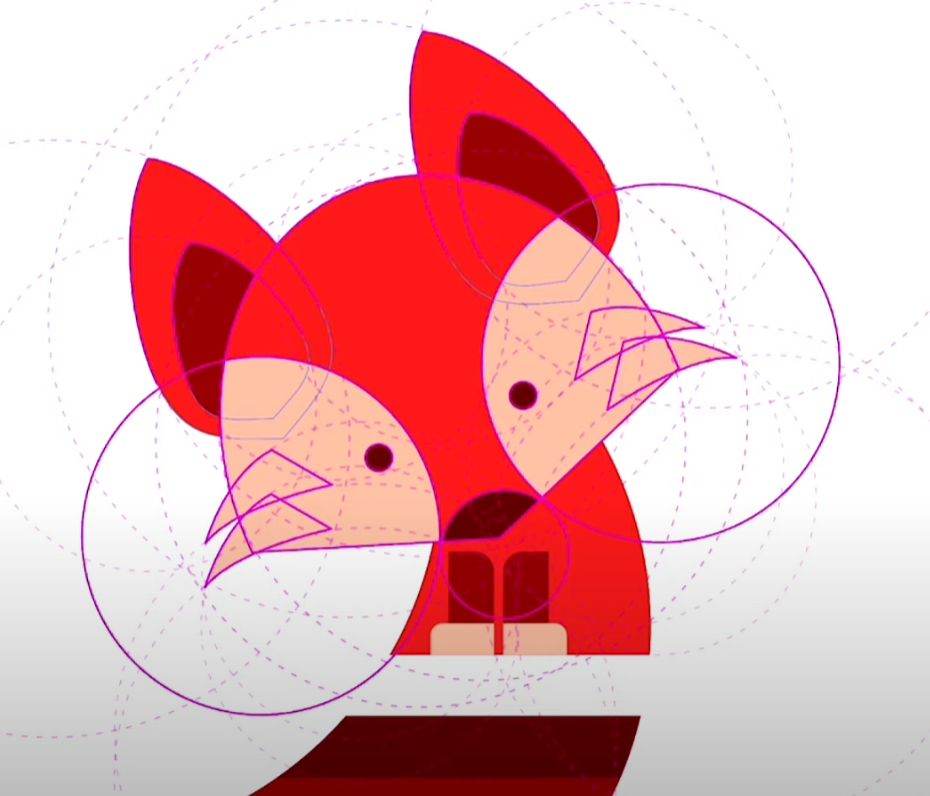

We built a tool to help artists easily manipulate vector artwork while preserving core shape properties such as right angles or perfect circles.

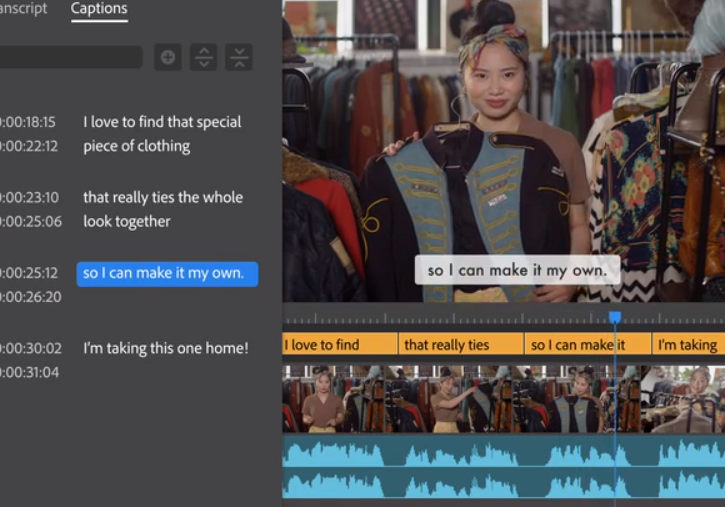

Automatically generate transcripts and add captions to your videos to improve accessibility and boost engagement with Speech to Text in Premiere Pro. I trained some of the core deep learning language models that determine how and where to split transcripts up into captions.

This technology lets you automatically recolor vector artwork to match the colors of a target photograph or palette.

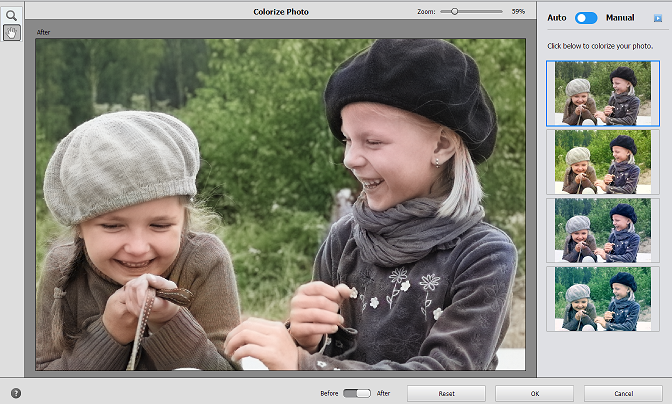

This tool uses a generative deep network to automatically colorize black and white photographs and can optionally incorporate user-guided coloring suggestions.

The freeform gradients tool naturally lets you control the diffusion of colors across your vector graphics and is one of many ways we are looking into making the Gradient Mesh tool easier and faster to use.

The photorealistic renderer in Adobe Dimension was updated to use a deep network that efficiently reduces the image noise caused by Monte Carlo sampling.

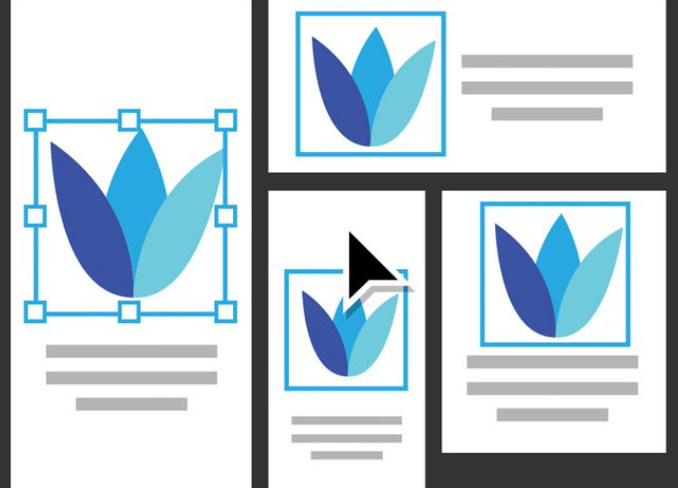

Often, your design contains multiple copies of similar objects, such as logos. If there is a need to make an edit to all such objects, you can use the global editing tool to edit all similar objects in the design in one step.

Puppet Warp lets you twist and distort parts of your artwork, such that the transformations appear natural. You can add, move, and rotate pins to seamlessly transform your artwork into different variations using the Puppet Warp tool in Illustrator.

Using deep learning, we can automatically estimate the perspective a photograph was taken from, making it much easier to composite 3D content into those scenes. This is an application of our CVPR 2017 paper on Deep Single Image Camera Calibration.